3 Key Insights for the 2026 Health AI Horizon

The gap between AI’s promise and its practice in healthcare has never been more apparent. In 2025, while headlines celebrated breakthroughs in medical AI, health systems worldwide grappled with a more complex reality: how do you actually implement these technologies at scale? Together with Google for Health, the Digital Medicine Society (DiMe) surveyed 2,041 healthcare leaders across 90 countries (29% clinicians, 21% technology or data professionals, 13% executives or administrators, and 38% spanning operations, research, education, policy, and patient engagement) to understand where organizations truly stand on AI adoption. What we found revealed both momentum and friction: confidence gaps across roles, workflow integration barriers, and an urgent shift in priorities toward governance, clinician engagement, and coordinated change management.

Health professionals don’t need more AI tools. They need stronger systems for using them well.

In partnership, DiMe and Google for Health spent 2025 translating these findings into actionable resources designed to help organizations move from experimentation to sustainable impact, including The Digital Medicine Academy® Health AI education courses and The Playbook: Implementing AI in Healthcare. These tools are grounded in real-world evidence and built by hundreds of expert collaborators.

——

But every organization’s journey is different. This report gives you both the landscape view and a local lens. We begin with what the data revealed about confidence, barriers, and priorities across health systems worldwide. Then we consider how health professional education can help close these gaps, turning insights into action and readiness into results.

Key insight #1 | The “confidence-competence” gap

68% of respondents did not feel “very confident” using or evaluating AI tools

We often assume the barrier to AI is “learning to code” or “understanding the tech.” The survey data reveals a different, more dangerous gap.

- Healthcare professionals are excited but lack confidence. Enthusiasm is high, yet critically evaluating AI systems consistently ranked as the lowest-confidence area across almost every role. Overall, more than two out of every three respondents did not feel “very confident” using or evaluating AI tools.

- The implication: We’re deploying AI into workflows faster than we’re equipping people to evaluate these new tools effectively. At the same time, validated metrics to assess competence are lacking. This is a patient safety crisis in the making, and it’s the #1 signal for curriculum design.

Key insight #2 | The barriers aren’t technical—they’re human

The survey reveals that the biggest barriers to AI adoption lie less in the technology itself, and more in how people understand, trust, and apply AI in real workflows.

The top barriers cited across roles were workflow integration (72%), unclear leadership direction (68%), and limited staff capacity or training (61%).

Patterns in respondents’ confidence levels help illustrate how these barriers manifest differently across roles.

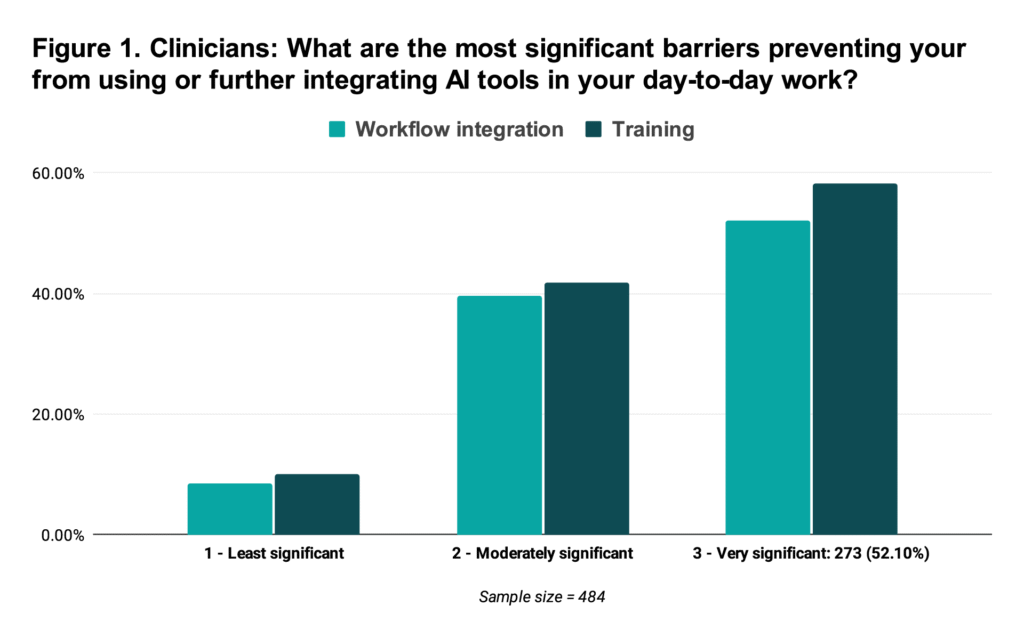

*Scores represent the average significance rating on a scale from 1 (least significant) to 3 (very significant).

The Clinician

- Reported friction: Clinicians are being told to “use AI,” but 30% of respondents reported not using AI at all. Of the clinicians who use AI, 91% rated integration as moderately or very difficult, and 86% gave the same rating to insufficient training (Figure 1).

- Potential fix: Practical, competency-based training that translates academic AI concepts into everyday use, helping clinicians apply AI effectively and integrate it smoothly into their workflows.

The Healthcare Executive

- Reported friction: Healthcare executives report significant uncertainty around governance: 87% rate “lack of guidelines” as a moderately or very important barrier. “Resource allocation” scores similarly, with 88% rating it a moderately or very important barrier to AI adoption.

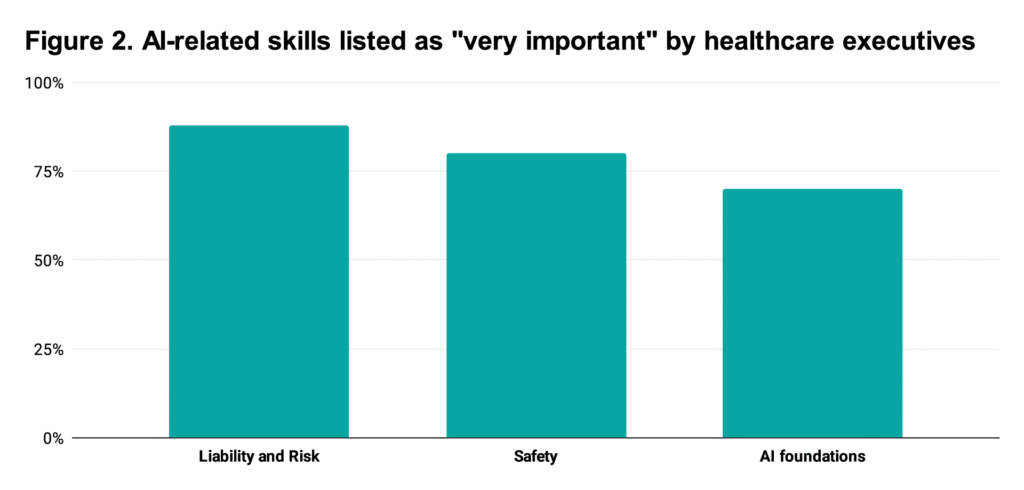

- Executives listed “Liability and risk,” “safety,” and “understanding AI foundations” among the most important skills (Figure 2).

- Potential fix: Strategic governance training resources, such as DiMe’s Playbook, that focus on governance, liability, evaluation of vendor claims, and change management.

The Educator

- Reported friction: Although educators ranked “Personalized education tools” as their most-reported use case, they were the second-least confident group at “Using AI in workflows.” This suggests that while educators are experimenting with AI tools, they lack the foundational confidence to explain these workflows or teach them effectively. If educators are using tools they don’t feel confident explaining, the pipeline for AI-ready health professionals risks being built on shaky ground.

- Potential fix: “Train the Trainer” programs to accelerate AI literacy among educators. Pair AI training with interactive workshops and hands-on practice, leaving plenty of space for questions and discussion.

Key insight #3 | AI readiness depends on role-specific, competency-based education

Across clinicians, executives, educators, and operations teams, the survey reveals a shared message: AI adoption stalls when people lack the skills, confidence, and clarity to use AI in their actual workflows.

So what does this mean for health systems building AI competency? These role-specific barriers point to role-specific solutions, and the data makes clear what each group needs to build competency and confidence.

The curriculum roadmap

Below is a sample roadmap for educators, based on these findings.

| Track | The audience | The barrier | The need |
|---|---|---|---|
| The critical appraiser | All clinicians | “I don’t know if I can trust this output.” | AI vigilance & safety. Topics to include: Verifying AI outputs, bias auditing, and “human-in-the-loop” protocols. |
| The workflow architect | Ops & support staff | “It takes too long to log in and use.” | Applied AI integration. Topics to include: Prompt engineering for clinical documentation, EHR integration, and workflow optimization. |
| The governance leader | Execs & admin | “We don’t have a policy for this.” | Responsible AI strategy. Topics to include: Governance and regulatory frameworks, procurement resources, and ethical considerations. |

From insights to action

Together, these insights tell a consistent story: if AI maturity was about acquiring tools in 2025, 2026 is about building trust, clarity, competency, and confidence across every role. That shift demands stronger systems, governance, and workforce readiness than most organizations have in place today.

DiMe and Google for Health translated these findings into actionable resources, including The Playbook: Implementing AI in Healthcare and a series of education courses from the DiMe Academy®.

Our goal for 2026 is to empower every health system to move beyond isolated pilots toward coordinated, evidence-driven use of AI in production at scale.

Join us in the work ahead to build the stronger systems that health systems and health professional teams need to use AI well:

- Care navigation frameworks that put AI into patient-centered context

- Lifecycle monitoring and learning health systems that keep trust grounded in evidence

- Education and competency models that empower all roles