DIME PROJECT

Implementing AI in Healthcare

Monitor for value

AI systems aren’t static. Your monitoring approach shouldn’t be either.

With the right people, systems, and processes in place, a lifecycle monitoring approach helps ensure your deployment is grounded in:

- Patient safety: Detect performance degradations before they affect clinical outcomes

- Financial accountability: Validate ROI and optimize your investments

- Regulatory compliance: Meet evolving FDA and accreditation requirements

- Scaling readiness: Build evidence to support broader organizational adoption

During monitoring, you will:

- Monitor model performance

- Track clinical outcomes and measures of success

- Govern with accountability and compliance

- Assess performance and realize total cost of ownership

- Define scale readiness criteria

The deployment of a tool marks the true beginning of your AI journey. This phase transforms your organization into a Learning Health System where continuous monitoring, optimization, and evidence generation drive sustained value. For decision-makers, clinical leaders, and IT leaders, The Playbook’s Monitor section provides an actionable framework to help you account for how models behave in the real world, how inputs shift over time, and how these shifts affect patient care.

Safeguard Against Model Drift and Decay

AI is not a “set it and forget it” technology. Models change — sometimes in obvious ways, often silently.

- Data drift: Patient populations, workflows, coding practices, and clinical guidelines evolve. Inputs shift, and predictions degrade.

- Model drift: Even with stable inputs, underlying relationships shift, leading to a slow, hidden drop in accuracy.

- Generative risk: LLMs can confidently produce incorrect or biased outputs, compounding clinical and reputational risk.

Without disciplined, ongoing monitoring, these shifts create cascading risks:

- Clinical: Incorrect recommendations, missed diagnoses, safety events.

- Equity: Hidden bias compounds disparities in vulnerable populations.

- Operational: Broken workflows erode trust and adoption.

- Regulatory: Noncompliance can stall or shut down deployments.

Build monitoring into your AI strategy from day one. Define performance thresholds, invest in alerting and retraining pipelines, and hold vendors accountable for transparency. A model’s value is only as strong as your vigilance in keeping it accurate, safe, and compliant.

Monitor model performance

Predictive and generative tools require fundamentally different monitoring strategies.

A predictive AI model for sepsis is monitored for discrimination and classification performance (AUROC, precision, recall), false positives, and data/model drift. Periodic checks and real-time alerts surface performance degradation and prompt your governance committee to act.
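A periodic batch check of this kind can be sketched in plain Python. This is a minimal illustration that assumes each batch pairs model scores with adjudicated outcomes, such as sepsis confirmed on chart review. The function names, the 0.5 alert threshold, and the 0.05 AUROC tolerance are hypothetical choices, not values from the Playbook.

```python
from typing import Sequence, Tuple


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based AUROC: chance a random event case outscores a random non-event case."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one event and one non-event")
    wins = 0.0
    for p in pos:  # O(n_pos * n_neg): fine for a sketch, slow for very large batches
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def precision_recall(scores, labels, threshold: float = 0.5) -> Tuple[float, float]:
    """Precision and recall (sensitivity) at a single alert threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return precision, recall


def batch_check(scores, labels, baseline_auroc: float,
                tolerance: float = 0.05, threshold: float = 0.5) -> dict:
    """Compare one batch with the validated baseline; flag an AUROC drop larger than `tolerance`."""
    current = auroc(scores, labels)
    precision, recall = precision_recall(scores, labels, threshold)
    return {
        "auroc": current,
        "precision": precision,
        "recall": recall,
        "alert": current < baseline_auroc - tolerance,
    }
```

A flagged batch is what would route the model to the governance committee. Tolerances should come from your own validation study, not from this sketch.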

How do I monitor generative chatbot performance?

A generative chatbot is monitored for hallucinations, toxicity, latency, and prompt-response alignment. Monitoring includes manual reviews, patient ratings, and red-teaming for unsafe outputs.
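Manual reviews and patient ratings become monitoring signals only once they are aggregated. The following is a minimal sketch that assumes reviewers record a few flags on each sampled response. The `ReviewedResponse` schema, the metric names, and the nearest-rank p95 latency are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ReviewedResponse:
    """One chatbot response after manual review (hypothetical schema)."""
    hallucination: bool                   # reviewer found an unsupported or fabricated claim
    toxic: bool                           # reviewer flagged unsafe or offensive content
    latency_ms: float                     # time from prompt to complete response
    patient_rating: Optional[int] = None  # optional 1-5 rating from the patient


def p95(values: List[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def summarize(reviews: List[ReviewedResponse]) -> dict:
    """Roll a batch of reviewed responses up into monitoring metrics."""
    n = len(reviews)
    ratings = [r.patient_rating for r in reviews if r.patient_rating is not None]
    return {
        "n_reviewed": n,
        "hallucination_rate": sum(r.hallucination for r in reviews) / n,
        "toxicity_rate": sum(r.toxic for r in reviews) / n,
        "p95_latency_ms": p95([r.latency_ms for r in reviews]),
        "mean_patient_rating": sum(ratings) / len(ratings) if ratings else None,
    }
```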

| | Predictive AI (e.g., sepsis alerts, readmission risk) | Generative AI (e.g., GPT-enabled documentation, chatbots) |
|---|---|---|
| Primary risk | Model drift → wrong predictions | Hallucinations → confident but incorrect outputs |
| Key metrics | AUROC, precision, recall, calibration | Hallucination rate, toxicity, accuracy vs. reference |
| Monitoring mode | Periodic batch performance checks + real-time alerts | Live content red-teaming + continuous RLHF loops |
| Failure signals | Rising false positives or degraded sensitivity | Unsafe recommendations, bias propagation, off-label responses |

A minimum monitoring stack

Data & Input

Goal: Detect drift or invalid inputs before they impact outputs.

Key Components:

- Daily input validation

- Drift detection

- Missing data alerts
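Daily input validation, drift detection, and missing-data alerts can share one check per feature. This minimal sketch uses the Population Stability Index (PSI) as the drift measure, which is a swapped-in choice because the Playbook does not name a method. The 0.25 PSI alert level is a common industry rule of thumb and not a clinical standard. The 10% missing-data limit, the bin edges, and the feature name in the test are hypothetical.

```python
import bisect
import math
from typing import List, Optional, Sequence


def psi(baseline: Sequence[float], current: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline` over shared, sorted bin edges."""
    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # floor at eps so empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def check_feature(name: str, baseline: Sequence[float],
                  today: Sequence[Optional[float]], edges: Sequence[float],
                  psi_alert: float = 0.25, missing_alert: float = 0.10) -> List[str]:
    """Daily input check for one feature: missing-data rate plus drift versus baseline."""
    alerts = []
    present = [v for v in today if v is not None]
    missing = 1 - len(present) / len(today)
    if missing > missing_alert:
        alerts.append(f"{name}: {missing:.0%} of values missing")
    if present:
        score = psi(baseline, present, edges)
        if score > psi_alert:
            alerts.append(f"{name}: PSI {score:.2f} exceeds {psi_alert}")
    return alerts
```

The check drops missing values before computing drift. This keeps a spike in missing data from being double-counted as distribution shift.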

Model Performance

Goal: Track real-world AI performance versus baseline.

Key Components:

- Core metrics (AUROC, precision/recall, calibration)

- Equity stratification (race, gender, age, language)

- Generative AI: hallucination, toxicity, relevance

- Threshold monitoring (alert on deviation)
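Equity stratification and threshold monitoring fit together. You compute a metric per subgroup and alert when any subgroup falls below its own baseline. This minimal sketch uses sensitivity (recall) as the stratified metric and a record layout of plain dicts. The field names, the 0.05 tolerance, and the 30-event minimum sample are hypothetical choices.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def subgroup_recall(records: List[dict], group_key: str,
                    threshold: float = 0.5) -> Dict[str, Tuple[float, int]]:
    """Sensitivity per subgroup: share of true events the model flagged, plus event count."""
    flagged: Dict[str, int] = defaultdict(int)
    events: Dict[str, int] = defaultdict(int)
    for r in records:
        if r["label"] != 1:
            continue
        group = r[group_key]
        events[group] += 1
        if r["score"] >= threshold:
            flagged[group] += 1
    return {g: (flagged[g] / events[g], events[g]) for g in events}


def equity_alerts(records: List[dict], group_key: str, baseline: Dict[str, float],
                  tolerance: float = 0.05, min_events: int = 30,
                  threshold: float = 0.5) -> List[str]:
    """Alert when a subgroup's sensitivity falls more than `tolerance` below its baseline."""
    alerts = []
    for group, (recall, n) in sorted(subgroup_recall(records, group_key, threshold).items()):
        if n < min_events:
            alerts.append(f"{group_key}={group}: only {n} events; too few to judge")
        elif group in baseline and recall < baseline[group] - tolerance:
            alerts.append(f"{group_key}={group}: recall {recall:.2f} vs. baseline {baseline[group]:.2f}")
    return alerts
```

Small subgroups are reported rather than silently skipped. In the populations that equity monitoring aims to protect, too little data is itself a finding.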

Operational & Clinical Impact

Goal: Ensure AI improves workflows and patient outcomes.

Key Components:

- Usage metrics (adoption, override rates)

- Workflow KPIs (time saved, bottlenecks)

- Outcome tracking (readmissions, LOS, diagnostic accuracy)

Governance & Escalation

Goal: Define accountability and rapid response for anomalies.

Key Components:

- Named model steward

- AI Safety & Performance Board (CMIO, CDO, IT, Quality/Safety)

- Escalation playbook: pause → recalibrate → retire

- Audit logging for compliance
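The escalation playbook (pause → recalibrate → retire) and audit logging can be encoded as a small state machine. Illegal jumps are rejected, and every change leaves a record. This is a sketch under stated assumptions. The status names, the allowed transitions, and the `DeployedModel` class are hypothetical. A production system would also write the log to durable, tamper-evident storage instead of an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List


class Status(Enum):
    ACTIVE = "active"
    PAUSED = "paused"
    RECALIBRATING = "recalibrating"
    RETIRED = "retired"


# Hypothetical policy: a live model must be paused before it is recalibrated or retired.
ALLOWED = {
    Status.ACTIVE: {Status.PAUSED},
    Status.PAUSED: {Status.ACTIVE, Status.RECALIBRATING, Status.RETIRED},
    Status.RECALIBRATING: {Status.ACTIVE, Status.RETIRED},
    Status.RETIRED: set(),
}


@dataclass
class DeployedModel:
    name: str
    steward: str  # the named model steward accountable for this tool
    status: Status = Status.ACTIVE
    audit_log: List[dict] = field(default_factory=list)

    def transition(self, new_status: Status, actor: str, reason: str) -> None:
        """Move to `new_status` if policy allows, recording who, when, and why."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not an allowed step")
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "model": self.name,
            "actor": actor,
            "from": self.status.value,
            "to": new_status.value,
            "reason": reason,
        })
        self.status = new_status
```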

Algorithmovigilance in Health AI

Predictive algorithms can amplify existing healthcare inequities, but debiasing strategies can improve fairness without sacrificing performance. As AI tools rapidly enter clinical practice, continuous monitoring and equity-focused oversight are becoming non-negotiable.

- Real-world healthcare data often bakes systemic inequities into AI models.

- Debiasing methods work, but removing race alone isn’t enough to fix disparities.

- Health systems need algorithmovigilance—continuous, equity-aware monitoring of deployed AI tools.

Learn from Dr. Peter Embi how Vanderbilt University Medical Center is applying algorithmovigilance.

Red-teaming LLMs

A recent study highlights the first large-scale clinical red-teaming effort to expose risks in GPT-based models used for healthcare tasks. Across 1,504 responses from GPT-3.5, GPT-4, and GPT-4o, 20% were inappropriate, with errors spanning safety, privacy, hallucinations, and bias—underscoring the need for rigorous testing before clinical deployment.

- 1 in 5 model outputs were unsafe or inaccurate, even in advanced GPT versions.

- Racial and gender biases, hallucinated citations, and privacy breaches were common.

- Clinician-led red teaming is essential to benchmark risks, guide safe adoption, and build trust in AI-driven care.

- Patients and care partners weren’t included in this testing, but their input is critical. If you’re deploying patient-facing chatbots or LLM tools, include real users early in red-teaming to catch usability gaps, unclear language, and missed contextual cues that technical and clinical reviewers may overlook.
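A red-teaming failure rate is an estimate from a finite sample, so it helps to report an interval alongside it. This is especially true when a local review batch is small. This minimal sketch uses the Wilson score interval, which is our choice of method and not one named in the study. The category names and counts you pass in would come from your own reviewers.

```python
import math
from typing import Dict, Tuple


def wilson_interval(failures: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% coverage."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = failures / total
    denom = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def rate_report(flag_counts: Dict[str, int], total: int) -> Dict[str, Tuple[float, float, float]]:
    """Point estimate and interval for each failure category found during red-teaming."""
    return {cat: (k / total, *wilson_interval(k, total)) for cat, k in flag_counts.items()}
```

Wilson is used here instead of the simple normal approximation because it stays within [0, 1]. It also behaves sensibly when few or no failures are observed, which is common in early patient-facing pilots.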

Performance evaluation of predictive AI models

A framework of 32 performance measures across five domains (discrimination, calibration, overall performance, classification, and clinical utility) outlines which metrics are most appropriate for assessing models intended for medical practice. The authors stress that improper measures can mislead clinicians and developers, potentially resulting in patient harm and higher costs. They recommend prioritizing measures that are proper, interpretable, and aligned with clinical decision thresholds.

Core performance domains

- Discrimination: Can the model separate patients with vs. without the event? Recommended metric: AUROC (area under the ROC curve).

- Calibration: Do predicted probabilities match observed outcomes? Recommended: calibration plots plus calibration slope and intercept.

- Overall performance: How close are predictions to reality? Recommended: Brier score (scaled) and related R² measures.

- Classification: How accurate are predictions at a decision threshold? Caution: accuracy and F1 are often misleading in clinical settings.

- Clinical utility: Does the model improve patient care decisions?
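The calibration and overall-performance measures above can be computed without special tooling. This is a minimal sketch. The scaled Brier score compares the model with always predicting the observed prevalence. The calibration slope comes from a logistic regression of outcomes on the model's logit, and the calibration intercept is fit with that slope fixed at 1. Both are fit here by plain Newton-Raphson with no convergence check. The sketch assumes both outcomes are present, at least two distinct predicted probabilities, and no perfect separation. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def _logit(p: float) -> float:
    p = min(max(p, 1e-6), 1 - 1e-6)  # clip so 0 or 1 predictions do not give infinite logits
    return math.log(p / (1 - p))


def _sigmoid(x: float) -> float:
    if x >= 0:
        return 1 / (1 + math.exp(-x))
    z = math.exp(x)  # numerically stable branch for large negative x
    return z / (1 + z)


def brier(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared difference between predicted probability and 0/1 outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def scaled_brier(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """1 - Brier / Brier of always predicting prevalence (1 = perfect, 0 = no better)."""
    prevalence = sum(outcomes) / len(outcomes)
    return 1 - brier(probs, outcomes) / (prevalence * (1 - prevalence))


def calibration_slope(probs, outcomes, iters: int = 25) -> Tuple[float, float]:
    """Fit logit P(y=1) = a + b * logit(p) by Newton-Raphson; b is the calibration slope."""
    xs = [_logit(p) for p in probs]
    a, b = 0.0, 1.0
    for _ in range(iters):
        ga = gb = saa = sab = sbb = 0.0
        for x, y in zip(xs, outcomes):
            mu = _sigmoid(a + b * x)
            w = mu * (1 - mu)
            ga += y - mu        # gradient of the log-likelihood for a
            gb += (y - mu) * x  # gradient for b
            saa += w            # Fisher information entries
            sab += w * x
            sbb += w * x * x
        det = saa * sbb - sab * sab  # zero if every prediction is identical
        a += (sbb * ga - sab * gb) / det
        b += (saa * gb - sab * ga) / det
    return a, b


def calibration_intercept(probs, outcomes, iters: int = 25) -> float:
    """Calibration-in-the-large: fit a with the slope fixed at 1 (logit(p) as an offset)."""
    xs = [_logit(p) for p in probs]
    a = 0.0
    for _ in range(iters):
        mus = [_sigmoid(a + x) for x in xs]
        grad = sum(y - mu for y, mu in zip(outcomes, mus))
        info = sum(mu * (1 - mu) for mu in mus)
        a += grad / info
    return a
```

A slope below 1 suggests overconfident predictions (too extreme), and a slope above 1 suggests underconfident ones. An intercept away from 0 means risk is systematically over- or under-estimated.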

Govern with accountability and compliance

Lean on your governance committee to establish a formal, governed process for updating, retraining, and redeploying AI models to ensure they remain accurate, safe, and effective as clinical practices and data evolve.

PRO TIP

Model retraining is not a simple software update; it is the creation of a new clinical tool that requires the same level of validation and oversight as the original. A disciplined lifecycle management process prevents the introduction of new risks and ensures that improvements are deployed safely and seamlessly.

- Define thresholds: Establish clear clinical and statistical thresholds that trigger a model retraining process. Triggers may include monitoring signals, such as performance degradation or data drift, and external changes, such as updated clinical guidelines.

- Establish a re-validation protocol that every retrained model must pass before deployment, often identical to the protocol used for the initial deployment.

- Implement formal change control for deploying new model versions.

- Maintain a model version registry, including training data, validation reports, and period of use.
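The four practices above can be tied together in code: threshold-based retraining triggers, a re-validation gate, change control, and a version registry. This is a minimal sketch under stated assumptions. `ModelVersion`, `ModelRegistry`, `retraining_triggers`, and their fields are hypothetical. The AUROC-drop and PSI limits are placeholders for thresholds your governance committee would set. Dataset and report fields hold references, such as IDs or paths, not the artifacts themselves.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: str
    training_data: str                       # reference to the training-data snapshot
    validation_report: Optional[str] = None  # reference to the re-validation report
    change_ticket: Optional[str] = None      # approved change-control record
    in_use_from: Optional[date] = None       # period of use, filled in on promotion
    in_use_to: Optional[date] = None


class ModelRegistry:
    def __init__(self) -> None:
        self.versions: Dict[str, ModelVersion] = {}
        self.live: Optional[str] = None

    def register(self, mv: ModelVersion) -> None:
        if mv.version in self.versions:
            raise ValueError(f"version {mv.version} already registered")
        self.versions[mv.version] = mv

    def promote(self, version: str, on: date) -> None:
        """Deploy a version only if it has passed re-validation and change control."""
        mv = self.versions[version]
        if mv.validation_report is None or mv.change_ticket is None:
            raise PermissionError(f"{version}: re-validation and change approval required")
        if self.live is not None:
            self.versions[self.live].in_use_to = on  # close out the previous version
        mv.in_use_from = on
        self.live = version


def retraining_triggers(current_auroc: float, baseline_auroc: float, psi: float,
                        guideline_changed: bool, max_auroc_drop: float = 0.05,
                        max_psi: float = 0.25) -> List[str]:
    """Return the reasons, if any, that a retraining process should start."""
    reasons = []
    if baseline_auroc - current_auroc > max_auroc_drop:
        reasons.append("performance degradation")
    if psi > max_psi:
        reasons.append("data drift")
    if guideline_changed:
        reasons.append("clinical guideline change")
    return reasons
```

Raising on promotion, instead of warning, makes the rule that "retraining creates a new clinical tool" enforceable. A retrained version cannot go live without its validation and change records.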

Beyond Deployment: The Imperative for AI Governance and Monitoring in Healthcare

Dr. Susannah Rose from Vanderbilt University Medical Center emphasizes that most health systems are adopting AI rapidly but lack the governance and monitoring infrastructure to ensure these tools remain safe, effective, and trusted over time. Without disciplined oversight, risks like model drift, unintended use, and patient harm escalate quickly.

Key Takeaways:

- Governance Gap: Most health systems lack robust frameworks for continuous monitoring, leaving AI tools vulnerable to performance degradation and misuse.

- Dynamic Risk Management: AI models require lifecycle oversight, not one-time validation — each retraining is effectively a new clinical tool.

- Patient Trust & Transparency: Consent strategies must balance ethics and practicality, focusing on risk level, autonomy, and patient-facing impact.

- Actionable Blueprint: Vanderbilt’s approach combines human-led governance, real-time monitoring, and rapid response workflows to mitigate emerging risks.

- Collaboration Imperative: No single organization can manage this alone — cross-system knowledge sharing and shared monitoring practices are critical.

Compliance requirements are also emerging fast. Your governance committee should stay up to date with:

FDA

The AI/ML SaMD framework increasingly emphasizes post-market monitoring for adaptive algorithms.

CMS & Joint Commission

Moving toward expectations for algorithm transparency and ongoing performance validation.

Legal Exposure

If AI contributes to harm, and you weren’t monitoring, liability is harder to defend.

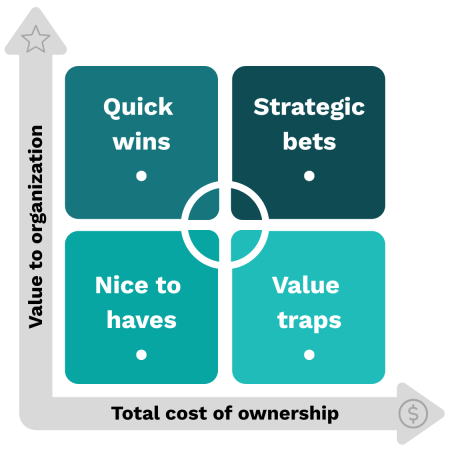

Assess performance and realize total cost of ownership

Ensure the AI tool delivers on its initial financial promise and that its ongoing costs are justified by its realized value. This activity closes the loop on the business case established during planning and helps you assess the total cost of ownership.

Success isn’t just clinical or operational; it must be financially sustainable and drive value in your organization.

Quick wins

Example: AI-driven scheduling optimization that reduces no-shows by 20% with minimal IT overhead.

Strategic bets

Example: Imaging AI that requires major EMR integration but significantly improves diagnostic accuracy.

Nice to haves

Example: AI transcription tool that saves a few minutes but doesn’t materially change outcomes.

Value traps

Example: AI chatbot that adds little clinical value but requires constant retraining and patient complaint handling.

Acquisition Costs

- Software or model licensing (subscription, per-user, per-case)

- Hardware and cloud infrastructure required for deployment

- Integration with EHRs and other clinical systems

- Initial training and onboarding

Implementation Costs

- Project management and workflow redesign

- Data preparation, cleaning, and annotation

- Security and compliance validation

- Change management for clinical and operational teams

Operational & Maintenance Costs

- Ongoing cloud/compute costs and storage

- Monitoring, auditing, and model retraining

- IT support and service desk overhead

- Performance tracking and continuous improvement initiatives

Indirect Costs / Opportunity Costs

- Staff time diverted from other initiatives

- Productivity impact during onboarding or model adjustments

- Risk mitigation measures (insurance, legal review)

- Potential regulatory fines for misimplementation

Your guide to estimating Total Cost of Ownership (TCO)

Establish baseline scope

- Define the number of workflows, sites, and end-users.

- Identify whether deployment is centralized or distributed.

Map cost drivers

- Assign costs to each category (direct and indirect).

- Include short-term (year 1) and long-term (3–5 year) projections.

Factor in scale and complexity

- Multiply per-unit costs by number of users/patients.

- Include incremental costs of adding new sites or models.

Add contingency

- Typically 15–25% for unforeseen regulatory, technical, or workflow issues.

Compare against value metrics

- Clinical outcomes improvement

- Operational efficiency (time saved, throughput gains)

- Financial return (reduced adverse events, reimbursement optimization)

- Equity & patient experience improvements
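The arithmetic in these steps can be sketched as follows. Per-unit costs are multiplied by users, incremental per-site costs are added, year 1 is projected separately from a multi-year horizon, and a contingency is applied. This is a minimal illustration. `CostLine` and `estimate_tco` are hypothetical. The 20% default contingency is simply the midpoint of the 15–25% range above, and real estimates may need discounting or inflation that this sketch ignores.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CostLine:
    """One cost driver (hypothetical structure); amounts in a single currency."""
    category: str                   # acquisition, implementation, operational, or indirect
    one_time: float = 0.0           # paid once, in year 1
    one_time_per_site: float = 0.0  # incremental cost of bringing each site live
    annual_fixed: float = 0.0       # recurring cost that does not scale with users
    annual_per_user: float = 0.0    # recurring cost per licensed user


def estimate_tco(lines: List[CostLine], users: int, sites: int, years: int,
                 contingency: float = 0.20) -> Dict[str, float]:
    """Year-1 and multi-year TCO, each padded by a contingency percentage."""
    one_time = sum(c.one_time + c.one_time_per_site * sites for c in lines)
    annual = sum(c.annual_fixed + c.annual_per_user * users for c in lines)
    pad = 1 + contingency
    return {
        "year_1": (one_time + annual) * pad,
        "horizon_total": (one_time + annual * years) * pad,
    }
```

Keeping per-user and per-site costs separate makes it easy to rerun the estimate when Path A expansion adds units or hospitals.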

Map your estimates into the four value quadrants (quick wins, strategic bets, nice to haves, and value traps) to gauge the tool's value to your organization.
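The Playbook names the four quadrants but not their axes. This sketch assumes the axes are realized value and total cost of ownership, which fits the examples: quick wins carry minimal IT overhead, and value traps add little value but demand constant upkeep. The function name and both cutoffs are hypothetical and would be set by your organization.

```python
def value_quadrant(annual_value: float, annual_tco: float,
                   value_cutoff: float, cost_cutoff: float) -> str:
    """Place a tool in one of four quadrants (assumed axes: realized value vs. cost)."""
    high_value = annual_value >= value_cutoff
    high_cost = annual_tco >= cost_cutoff
    if high_value:
        return "Strategic bet" if high_cost else "Quick win"
    return "Value trap" if high_cost else "Nice to have"
```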

Define scale readiness criteria

Despite astronomical investments, most AI pilots do not scale. The core barrier isn’t model quality, infrastructure, or regulation, but workflow integration and readiness planning.

You’ve deployed the system, tracked outcomes, and monitored performance in the real world. Scaling should be a reward for success, not a workaround for incomplete evidence. Your decision to scale AI throughout the enterprise comes down to two distinct paths. Each path demands different readiness checks to avoid introducing clinical, operational, and compliance risks at scale.

Path A: Expanding Usage

Rolling out an existing AI tool to more people (clinicians, staff, or patients). For example, expanding an AI-driven sepsis alert from 1 hospital unit to 10 hospitals in your system.

Path B: Expanding the Stack

Introducing additional AI tools into your environment. For example, deploying a generative documentation assistant alongside predictive risk models.

Scale readiness checklist

Path A: Expanding Usage

- Proven clinical efficacy at pilot sites (validated against KPIs and patient outcomes)

- No unresolved patient safety issues

- Override rates and clinician feedback reviewed — no workflow “landmines”

- Adequate clinician training materials and quick-reference guides in place

- Minimum monitoring stack operationalized (data, model, operational metrics)

- Threshold-driven alerting tested and reviewed with the AI Safety & Performance Board

- Retraining, revalidation, and rollback protocols documented

- Demonstrated ROI at pilot scale

- TCO assessed for additional user licenses, support, infrastructure, and related scaling costs

- Scaling plan aligns with enterprise AI roadmap and budget approvals

Scale readiness checklist

Path B: Expanding the Stack

- Dedicated AI governance framework that scales across multiple tools

- Standardized intake process for evaluating new vendors and models

- Risk stratification framework (clinical, operational, financial, reputational) established

- IT confirms infrastructure capacity (compute, storage, latency tolerances)

- Integration pathways tested — tools don’t compete for workflow space

- Data interoperability ensured across tools to avoid conflicting insights

- Baseline algorithmovigilance processes in place across all models

- Equity-focused performance monitoring operationalized for each tool

- Red-teaming performed on any patient-facing generative AI tools

Next steps

Implementing AI in hospitals is a strategic commitment to delivering smarter, safer, and more equitable care. Health systems that succeed don’t treat AI as a one-off deployment; they treat it as a capability that matures through clear leadership, accountable infrastructure, and continuous learning.

But here’s the reality: pilots fail to scale because organizations underestimate the complexity of workflow integration, governance, and change management. A successful deployment is your initial proof. The next step is Scale: turning early wins into sustained, system-wide impact. Done right, scaling AI drives operational efficiency, financial sustainability, and better patient outcomes while establishing your organization as a leader in responsible, evidence-based innovation.

That’s where you go next.